Shorts

AI's bewildering rate of change

11 July 2024

If you have been bewildered by the rate of change in AI, you aren't alone. In 2021, AI could correctly solve only around 5% of the problems in the MATH benchmark. The result a year later? A 50% pass rate in 2022, crushing what had been predicted. Today AI solves over 90% of the problems.

Dogfooding: The Startup Cheat Code

08 July 2024

Dogfooding is a cheat code for startups, but unfortunately, it is impractical for most. "Dogfooding" is when a software company uses its own product internally. It has the magical effect of creating deep user empathy throughout your team. When your users' problems are your engineers' problems, issues tend to get resolved quickly and well, no matter how small the concern. A great example of this was GitHub's early days. GitHub didn't have to create focus groups or customer product councils; they just figured out what they were missing most from the product and built it. If you are building an email client, it is easy to see how a company can mandate its use, but what if you write software to help shipping companies optimize their routes? Not quite as easy. Dogfooding is beyond the reach of many companies, but if you can tap it, you must. I practice what I preach here. I chose my tax and accounting solution, Lettuce Financial, after learning that their team is a group of 1099ers who all use Lettuce themselves. More mature options were available to me, but I've seen what happens when you align incentives: magic. 🚀🚀🚀

How do startups win over users?

01 July 2024

How do startups win over users? They do the right thing. If you aren't aware, a malware company recently purchased the GitHub account and website for the Polyfill JavaScript library. Polyfill is an old library that helped sites achieve cross-browser compatibility, and over 100,000 sites still use it. Unfortunately, many of those sites are unaware of the issue. So what did Cloudflare do? For all of their paid and free-tier customers, they are now redirecting all requests for the malware-infected version to their own safe, malware-free version. They did the right thing, which is why I am a happy, paying customer.
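For anyone auditing their own pages, here is a minimal Python sketch of the idea behind that fix: find `<script>` tags that load from the compromised host and point them at a safe mirror. It is not Cloudflare's implementation. The host list, the function names, and the `mirror_host` parameter are assumptions for illustration, and the rewrite assumes the mirror serves the same paths, which you would need to verify before relying on it.

```python
from html.parser import HTMLParser
from urllib.parse import urlsplit

# Assumption: the hosts treated as compromised in this sketch.
COMPROMISED_HOSTS = {"polyfill.io", "cdn.polyfill.io"}


class ScriptSrcCollector(HTMLParser):
    """Collects the src attribute of every <script> tag in a page."""

    def __init__(self) -> None:
        super().__init__()
        self.sources: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "script":
            for name, value in attrs:
                if name == "src" and value:
                    self.sources.append(value)


def find_compromised_scripts(html: str) -> list[str]:
    """Return script URLs whose host is in COMPROMISED_HOSTS."""
    collector = ScriptSrcCollector()
    collector.feed(html)
    flagged = []
    for src in collector.sources:
        # Protocol-relative URLs ("//cdn.polyfill.io/...") still yield a host;
        # relative paths ("/js/app.js") yield None and are skipped.
        host = (urlsplit(src).hostname or "").lower()
        if host in COMPROMISED_HOSTS:
            flagged.append(src)
    return flagged


def rewrite_to_mirror(url: str, mirror_host: str) -> str:
    """Point a flagged URL at a (hypothetical) mirror host over HTTPS."""
    parts = urlsplit(url)
    # Keep path and query; swap scheme and host.
    return parts._replace(scheme="https", netloc=mirror_host).geturl()
```

Because the rewrite keeps the path and query string, a flagged `/v3/polyfill.min.js?features=es6` request maps onto the same path on the mirror.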

Sign of the times: ChatGPT mistake cost our startup more than $10,000

27 June 2024

If you've been in the tech industry for a while, you've read more than your fair share of "Junior engineer dropped our production database" posts. Recently spotted in the wild is a new flavor of this Hacker News favorite: ChatGPT did it. So what happened? ⇢ The team migrated their stack from Next.js to Python/FastAPI with the help of ChatGPT ⇢ They started hearing that users couldn't subscribe anymore ⇢ They finally discovered that the issue was hardcoded IDs causing collisions. All because they trusted the code that ChatGPT spit out.
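The post doesn't show the offending code. As a hypothetical illustration, here is one common way a "unique" ID ends up effectively hardcoded in Python: a default value that is evaluated once, when the class is defined, instead of once per object. The class names are invented for this sketch.

```python
import uuid
from dataclasses import dataclass, field


@dataclass
class BuggySubscription:
    user: str
    # str(uuid.uuid4()) runs once, when the class body executes, so every
    # instance that relies on the default shares the same "unique" ID.
    id: str = str(uuid.uuid4())


@dataclass
class FixedSubscription:
    user: str
    # default_factory is called for each new instance, so each gets a fresh UUID.
    id: str = field(default_factory=lambda: str(uuid.uuid4()))
```

The same trap applies to ORM column defaults that accept a callable: pass the function (for example `uuid.uuid4`), not the result of calling it.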

The responsibilities of the startup CTO

25 June 2024

⇢ Work across the stack to develop the product for market ⇢ Present the technology vision to your board and your investors ⇢ Architect the stack to scale with continued growth ⇢ Review developers' pull requests to ensure code quality ⇢ Recruit, evaluate, and onboard the engineering team ⇢ Develop and communicate best practices for the technology team ⇢ Enforce testing practices that minimize regressions and shorten time to market ⇢ Track and manage technical debt ⇢ Ensure the development processes and technology meet security and regulatory controls ⇢ Develop a strategic technology vision for the company ⇢ Demo and evangelize the technology to prospective customers and partners ⇢ Manage cloud, partner, and technology spending to reduce costs ⇢ Successfully partner with Product, People, and GTM functions ⇢ Produce thought leadership to aid in recruiting and to develop the company brand ⇢ Stay abreast of the ever-changing technology landscape 😅 The truth is that no individual can do all of these things well. And CEOs rarely know what they need out of a CTO, let alone what they have...

Zoom CEO envisions AI deepfakes attending meetings in your place

12 June 2024

"Let’s say the team is waiting for the CEO to make a decision or maybe some meaningful conversation, my digital twin really can represent me and also can be part of the decision-making process." Replacing the CEO with an LLM? I can't think of a worse place to drop an LLM.

When GenAI prompting reaches its limits, continual learning steps in

11 June 2024

A recent blog post by Dosu's CEO, Devin Stein, sheds light on how they significantly increased accuracy without resorting to complex and error-prone prompt engineering. Instead, they leveraged user feedback and transformed it into few-shot examples for continual learning. Here's the gist of why this matters: ⇢ Dosu automates repetitive engineering tasks like labeling tickets and PRs, reducing interruptions for engineers. ⇢ By collecting user feedback and converting it into in-context learning examples, Dosu adapts continuously and maintains high accuracy. ⇢ Prompt engineering and fine-tuning come with downsides like complexity and data drift, which their approach avoids. ⇢ Their continual learning method is simple: they collect corrections, store them as examples, and use them during task execution to improve accuracy. The results? Dosu’s label accuracy jumped by over 30%. Definitely worth a read:
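Dosu's post describes the approach in prose. Below is a hypothetical, minimal Python version of the same loop: store each user correction as an example, retrieve the most similar stored examples for a new input, and place them in the prompt as few-shot examples. The names (`FeedbackStore`, `build_prompt`) are invented, and the word-overlap similarity is a stand-in for whatever retrieval Dosu actually uses.

```python
from dataclasses import dataclass


@dataclass
class Example:
    text: str   # the input that was labeled, e.g. a ticket title
    label: str  # the label a user corrected it to


class FeedbackStore:
    """Hypothetical store of user corrections reused as few-shot examples."""

    def __init__(self) -> None:
        self.examples: list[Example] = []

    def record_correction(self, text: str, corrected_label: str) -> None:
        self.examples.append(Example(text, corrected_label))

    def most_similar(self, text: str, k: int = 3) -> list[Example]:
        # Rank stored examples by Jaccard word overlap with the new input.
        # A production system would more likely use embeddings.
        query = set(text.lower().split())

        def score(ex: Example) -> float:
            words = set(ex.text.lower().split())
            union = query | words
            return len(query & words) / len(union) if union else 0.0

        return sorted(self.examples, key=score, reverse=True)[:k]


def build_prompt(store: FeedbackStore, text: str, labels: list[str]) -> str:
    """Assemble a labeling prompt with retrieved corrections as examples."""
    lines = [f"Choose one label from: {', '.join(labels)}.", ""]
    for ex in store.most_similar(text):
        lines.append(f"Input: {ex.text}\nLabel: {ex.label}\n")
    lines.append(f"Input: {text}\nLabel:")
    return "\n".join(lines)
```

The appeal of this design is that "learning" is just appending to a list: no retraining, and a bad correction can be deleted as easily as it was added.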

Primer on Generative AI

10 June 2024

Looking for a primer on generative AI that isn't already months out of date? William Brown just released a handbook aimed at helping technical folks navigate the ever-changing world of AI. It is a carefully curated roadmap filled with the best explainer links from around the web. ⇢ It organizes scattered yet valuable resources (blog posts, videos, and more) into a cohesive, textbook-like format. ⇢ It's a living document, open to community input and updates as the field evolves. ⇢ It's aimed at readers with some coding experience and a high-school-level math background. Check it out 👇

What if AI doesn't displace jobs?

26 May 2024

What if AI doesn't displace jobs so much as level the playing field? Will we see fewer jobs or, maybe, a compression in salaries? 🤔 Senior roles are paid more because of their accumulated knowledge, but what happens when that knowledge can be accessed and leveraged earlier in one's career? We might see: → Junior roles needing less support in onboarding and training → Mid-level roles increasing their impact and productivity → Senior roles spending less time supporting and advising

Prediction: Gen AI drives the adoption of more standardized technology

19 March 2024

Prediction: Gen AI drives the adoption of more standardized software architecture practices 1. Gen AI thrives on well-documented concepts and patterns 2. Teams that standardize on these Gen AI-friendly patterns will see the most productivity gains 3. The bar to justify novel approaches will go up 4. We will see more consolidation in approaches Which startup is AI going to accelerate faster? 1️⃣ Mono-repo, Rails/Django, Postgres 2️⃣ Multi-repo, microservices, custom testing tools, multiple data stores, multiple queues, etc., etc. What do you think? 1️⃣ or 2️⃣? I know which one I think Gen AI will automate the heck out of ...

The future for developers named Devin

13 March 2024

Everyone is wondering about the future of software engineering this week, but I'm wondering about the future of developers named Devin 😂 Is there a support group yet? The bad jokes must be endless.

AI: Garbage in, garbage out

13 March 2024

AI solutions are data-hungry, but ... Garbage in 🗑️, garbage out 🗑️. Most companies fail to create well-curated knowledge centers. It was a problem before LLMs, but oh boy, it is going to be a bigger problem in the future. Investing in AI, but ignoring your data? 👎 👎 👎 Just talked to a startup that is solving this problem in a really novel way.

Demo: LLM Agent Augmented with Commercial Data

05 March 2024

LLM Agents + Commercial Data 🚀 How was it built? (OpenAI + LangChain + Streamlit) ➡️ Demyst ➡️ (Google Cloud, HouseCanary, CarsXE API, ...)

Representation engineering using control vectors

20 February 2024

Complex system prompts are often used to safeguard LLMs, but they can also be subverted 👇 I recently learned about "Representation Engineering" using control vectors. These control vectors can be applied to a model at inference time to influence how it responds to requests. In a post, Theia Vogel explains how these control vectors could protect against jailbreaking techniques: "The whole point of a jailbreak is that you're adding more tokens to distract from, invert the effects of, or minimize the troublesome prompt. But a control vector is everywhere, on every token, always." This technique could result in an agent that is harder to subvert. 👏👏👏 I highly recommend you read Theia's post.
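To make "everywhere, on every token, always" concrete, here is a toy Python sketch. It is not Theia Vogel's code or any library's API. It derives a direction as the difference of mean activations between two contrasting sets of prompts, then adds that scaled direction to the hidden state at every token position. The short float lists stand in for a real model layer's hidden states (an assumption), and representation-engineering implementations typically extract the direction with something closer to PCA over paired differences; the mean difference here is a simpler stand-in.

```python
# Toy hidden states: each vector stands in for one token's hidden state at a
# single layer. Real models use thousands of dimensions.
Vector = list[float]


def mean(vectors: list[Vector]) -> Vector:
    """Element-wise mean of equally sized vectors."""
    n = len(vectors)
    return [sum(col) / n for col in zip(*vectors)]


def control_vector(positive: list[Vector], negative: list[Vector]) -> Vector:
    """Difference of mean activations for two contrasting prompt sets."""
    pos, neg = mean(positive), mean(negative)
    return [p - q for p, q in zip(pos, neg)]


def apply_control(hidden_states: list[Vector], vec: Vector, strength: float) -> list[Vector]:
    """Add the scaled control vector to the hidden state of every token."""
    return [[h + strength * v for h, v in zip(state, vec)] for state in hidden_states]
```

Because the shift is applied per position, appending more jailbreak tokens just produces more positions that also receive the shift; there is no single instruction for the extra tokens to drown out.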

Agent frameworks introduce a new class of bug

15 February 2024

LLM Agents have introduced a new kind of bug to worry about 🐛 And it exists in a place that regularly gets neglected … Code comments ✍️ LLM agents interoperate with tools using prompts, which are sometimes stored in Python docstrings. The oft-neglected docstring just became a pivotal part of your application. What could go wrong? 🤔

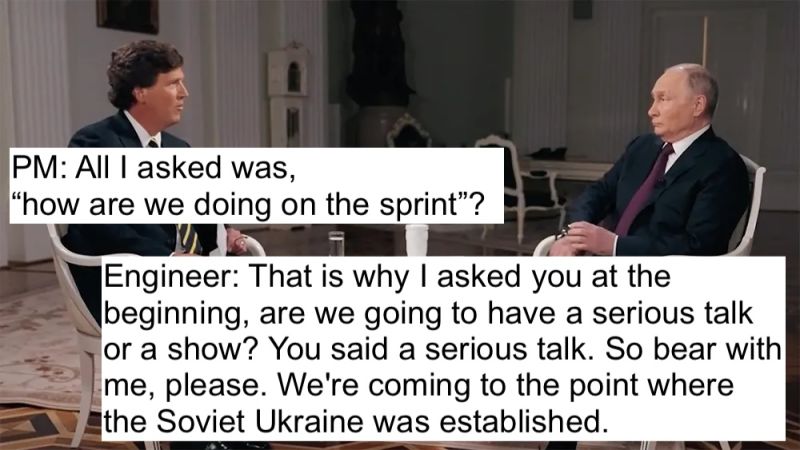
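Frameworks such as LangChain can build a tool's description from its docstring. Here is a hypothetical, framework-free Python sketch of the same pattern, showing how a docstring flows straight into the prompt the model reads. The `tool` decorator, `TOOLS` registry, and `get_order_total` function are invented for illustration and are not any library's API.

```python
import inspect
from typing import Callable

# Hypothetical registry: each tool's docstring becomes the description the
# LLM sees when deciding which tool to call.
TOOLS: dict[str, Callable] = {}


def tool(fn: Callable) -> Callable:
    """Register fn as an agent tool; a docstring is required."""
    if not fn.__doc__:
        raise ValueError(f"tool {fn.__name__!r} has no docstring")
    TOOLS[fn.__name__] = fn
    return fn


def render_tool_prompt() -> str:
    """Build the tool section of a system prompt from the docstrings."""
    return "\n".join(
        f"- {name}: {inspect.cleandoc(fn.__doc__)}" for name, fn in TOOLS.items()
    )


@tool
def get_order_total(order_id: str) -> float:
    """Return the order total in US dollars."""
    # If someone changes this to return cents without updating the docstring,
    # the agent is now being told something false, and no test may notice.
    return 42.0
```

Treating the docstring as a required, reviewed input (as the decorator does here) is one small guard; a stale description is still a bug that only shows up in agent behavior.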

All I asked was how we were doing in the sprint

09 February 2024

What's the problem with microservices?

08 February 2024

Last year, researchers from Google published the paper "Towards Modern Development of Cloud Applications." In it, they describe five issues with microservices: 👎 It is slower. Serializing data is one of the more expensive things applications do, and microservice architectures create a lot of serialization. 👎 It is harder to test. If every service is deployed independently, you have an ever-expanding set of testable (or, more to the point, untested) permutations. 👎 It is more complicated to operate. Each service ends up with its own build, test, and deploy steps, and while one can work towards a shared framework, skew is almost inevitable. 👎 It slows down change. Deployed APIs are less likely to be modified for fear of breaking production systems, leading to multiple versions of APIs that accomplish nearly the same function. 👎 It impacts application development. Engineers must consider all affected services when rolling out broad changes and create carefully orchestrated deployment plans. So what is their fix? ✅ Build a monolith, modularized into distinct components ✅ Use the runtime to assign logical components to physical processes (see Akka in the Scala world) ✅ Deploy everything together so that all services are constantly working with the same known version. So, do you need microservices or monolith-as-microservices? The answer is _probably_ neither. Most teams and technologies benefit from a much simpler, monolithic model. You do not have Google-sized problems. But, of course, Google Cloud (and Amazon and Azure) would like you to think you do!
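The second ✅ is the interesting one: code is written against logical components, and a runtime decides which ones share a process. Below is a hypothetical Python sketch of that idea, not the paper's framework or its API. The `Runtime` and `Greeter` classes and the placement map are invented, and a JSON round trip stands in for a real network call so the serialization cost from the first 👎 becomes visible as a counter.

```python
import json
from typing import Any, Callable


class Greeter:
    """A logical component; it knows nothing about where it will run."""

    def greet(self, name: str) -> str:
        return f"Hello, {name}!"


class Runtime:
    """Hypothetical runtime that maps logical components to processes."""

    def __init__(self, placement: dict[str, str]) -> None:
        self.placement = placement          # component name -> process name
        self.instances: dict[str, Any] = {}
        self.serialized_calls = 0

    def register(self, name: str, component: Any) -> None:
        self.instances[name] = component

    def call(self, caller: str, callee: str, method: str, *args: Any) -> Any:
        fn: Callable = getattr(self.instances[callee], method)
        if self.placement[caller] == self.placement[callee]:
            return fn(*args)                # same process: plain method call
        # Different processes: simulate the wire with a JSON round trip.
        self.serialized_calls += 1
        payload = json.loads(json.dumps(args))
        return json.loads(json.dumps(fn(*payload)))
```

The calling code is identical in both placements; only the placement map changes, which is what lets everything be deployed together at one known version while still being split across processes if that ever becomes necessary.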

The future of LLMs is multi-agent systems

15 January 2024

"I think there's a growing sentiment these days that the future of LLMs and agents is going to be multi-agents. You're actually cool with living in a world where you're going to have different LLMs and agents specialize in different tasks, as long as they can communicate with each other. Either peer-to-peer or in some sort of hierarchy." "If we can actually create these good interfaces where people are building specialized LLMs and agents for these different tasks, and also design good communication protocols between them, then you're going to start to see greater capabilities come about, not just through a single kind of LLM or agent, but through the communication system as well." - Jerry Liu
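A minimal Python sketch of the "hierarchy" variant from the quote: specialized agents share one message format (the communication protocol) and a coordinator routes each task to the right specialist. Everything here is hypothetical; the specialists are deterministic string functions standing in for LLM-backed agents, and the peer-to-peer variant is not shown.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Message:
    """Hypothetical message format shared by every agent."""
    sender: str
    task: str
    content: str


Agent = Callable[[Message], Message]


def summarizer(msg: Message) -> Message:
    # Stand-in for an LLM summarizer: keep only the first sentence.
    return Message("summarizer", msg.task, msg.content.split(".")[0] + ".")


def keyword_extractor(msg: Message) -> Message:
    # Stand-in for an LLM extractor: keep words longer than six letters.
    words = [w.strip(".,") for w in msg.content.split() if len(w.strip(".,")) > 6]
    return Message("keyword_extractor", msg.task, ", ".join(words))


class Coordinator:
    """Top of a simple hierarchy: routes each task to a specialist."""

    def __init__(self, specialists: dict[str, Agent]) -> None:
        self.specialists = specialists

    def handle(self, msg: Message) -> Message:
        agent = self.specialists.get(msg.task)
        if agent is None:
            return Message("coordinator", msg.task, f"no agent for task {msg.task!r}")
        return agent(msg)
```

Because every agent speaks the same `Message` type, adding a new specialist is a one-line registration rather than a new integration.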

Leaders are in the details

15 January 2024

"And so I basically got involved in every single detail and I told leaders that leaders are in the details." "What everyone really wants is clarity." "What everyone really wants is to row in the same direction really quickly." - Brian Chesky